The debate over enterprise AI is shifting from raw capability to skillful application. In response, a new strategy is emerging, and it already shows early signs of success. Instead of asking AI for answers, some leaders are training it to ask questions of them. When the machine becomes a thinking partner rather than an instant-answer engine, it can become a catalyst for human expertise.

For an insider's perspective, we spoke with Emily Ryder Martins, Chief Data and Analytics Officer for Australian healthcare provider St John of God Health Care. Having spent her career in highly regulated sectors with firms like Bupa, Medibank, and EY, Martins specializes in making healthcare innovation safer. Today, her challenge is to implement generative AI in an environment where mistakes have real human consequences.

For Martins, that process typically starts with a conversation. The primary reason to adopt a human-centric approach, she explains, is the tangible limitations of current models. And in healthcare, the risk isn't just clinical inaccuracy. It also includes the human cost.

If fast is false: As an example, Martins describes the potential consequences of sending a healthy person down a stressful and unnecessary treatment path. "In a recent case, ChatGPT was used to identify diabetic retinopathy. It was only 70-75% accurate compared to 90% for doctors, but the real issue was the high number of false positives. It was identifying the disease where it didn't exist. From a patient's perspective, that's a huge problem. You don't want to go down a significant treatment pathway for a condition you don't have. AI can be a starting point, but it absolutely must be augmented with professional judgment. We're just not there yet for high-risk clinical decisions."

The solution is a technique Martins calls “reverse prompting.” Instead of asking the AI for an output, the user prompts it to ask questions. For example, during a problem-solving session with a hospital team, she describes using it to break through the overwhelming "blank piece of paper" feeling. The AI posed questions about goals and objectives, which sparked deep analysis from the team. Working together, they generated a first draft of the plan in less time and with better ideas. The team still does the thinking, Martins insists. The AI is just an amplifier.
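As a rough illustration of what a reverse prompt might look like in practice, here is a minimal sketch. The function name `build_reverse_prompt`, its parameters, the prompt wording, and the example topic are all assumptions made for illustration. They are not Martins' actual prompts or any tool's API.

```python
def build_reverse_prompt(topic: str, max_questions: int = 5) -> str:
    """Return a prompt that asks the model to interview the user
    instead of producing an answer straight away.

    Hypothetical helper: the wording below is an illustrative guess,
    not a prompt quoted in the article.
    """
    if max_questions < 1:
        raise ValueError("max_questions must be at least 1")
    return (
        f"We are planning the following: {topic}.\n"
        "Do not propose a solution yet. "
        f"Ask me up to {max_questions} questions, one at a time, about our "
        "goals, objectives, and constraints. "
        "Wait for my answer before asking the next question. "
        "Only after I have answered, help me draft a first version of the "
        "plan based on my answers."
    )


# Example topic is invented for illustration.
print(build_reverse_prompt("reducing discharge delays on a surgical ward"))
```

The resulting text can be pasted into whichever approved GenAI tool a team uses. The point of the design is that the model's first move is a question, so the team's own answers drive the draft.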

But Martins had to establish the rules of the road first. Surprisingly, her governance framework, which included a strict ban on uploading internal documents to public AI tools, had an unintended consequence: "governance paralysis." Adoption varied wildly across her hospitals as a result, from 80% in some to just 30% in others. Why? Staff were too hesitant to use the tools.

Coloring inside the lines: "Ironically, one of our biggest barriers was our own safety policy. It's full of 'don'ts,' so people assumed they couldn't do anything. I have to show them there's a lot you can do safely. You can't put patient details in, but you can say, 'You are an expert discharge nurse. Draft a personalized plan for a 56-year-old who had a hip replacement, has a history of diabetes, and is going home to a small dog with very little support.' People are surprised when they see that. It's about teaching them how to be creative within the guardrails."
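The prompt Martins describes follows a simple pattern: an expert role, a task, and a scenario described only by non-identifying characteristics. A minimal sketch of that pattern follows. The helper `build_role_prompt` and its parameters are hypothetical, and the code does not detect or remove patient identifiers; keeping those out remains the user's responsibility.

```python
def build_role_prompt(role: str, task: str, scenario: list[str]) -> str:
    """Compose a role-based prompt from a role, a task, and a list of
    generic scenario characteristics.

    Hypothetical helper: it only formats text and performs no privacy
    checks, so callers must supply non-identifying details only.
    """
    if not scenario:
        raise ValueError("scenario needs at least one characteristic")
    details = "; ".join(scenario)
    return (
        f"You are an expert {role}. {task} "
        f"for a patient with these characteristics: {details}."
    )


# Characteristics taken from the example Martins gives in the article.
prompt = build_role_prompt(
    role="discharge nurse",
    task="Draft a personalized plan",
    scenario=[
        "56 years old",
        "had a hip replacement",
        "history of diabetes",
        "going home to a small dog with very little support",
    ],
)
print(prompt)
```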

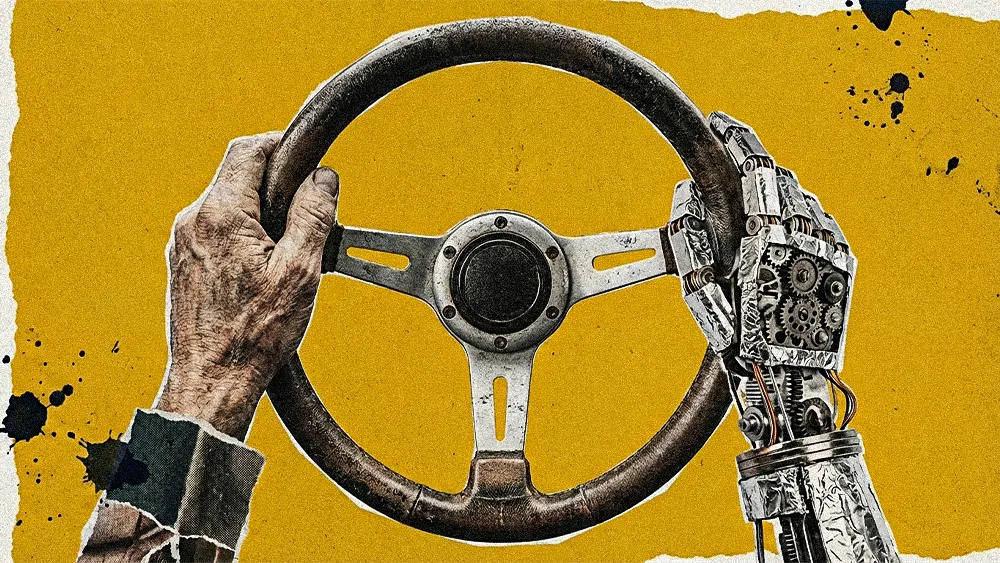

Education is essential, Martins agrees. But she also doesn't see apprehension as a problem. "I would prefer to have that caution than to have people just entering all sorts of things in there without care or attention. The nervousness, I think, is healthy. It's our job to help people understand how to use it."

To counter similar concerns that AI might make people cognitively lazy, her message is: "healthy skepticism." For Martins, a blunt understanding of AI's limits is essential. "You have to remember that GenAI does not know the truth. It's a machine giving you a probabilistic answer. It's just saying, 'Based on what you gave me, this is what I think the answer is.' It doesn't know, and it doesn't care if it's true or not."

Meet the new hires: To help her staff understand, Martins tells stories. "I encourage my teams to think of GenAI as a 'very, very junior nurse who is a people-pleaser.' It will do everything to keep you happy and give you the answer it thinks you want. But if you keep giving it information, at some point, it will lose interest and make things up because it wants to complete the task. These models help people remember that the output isn't gospel."

From hype to hygiene: Grounding AI's potential in the tangible, everyday responsibilities of frontline managers also answers the crucial "what's in it for me?" question, she says. "The reality for us is that 90% of our staff are nurses delivering patient care. They're here for that, not to learn 'fancy AI stuff.' Pointing them to generic Microsoft training isn't the answer. We need to make it relevant by using our own real-world examples. A nurse unit manager can ask a GenAI tool, 'How can I improve hand hygiene on my ward?' It can give them a starter plan, suggest ideas they hadn't considered, or even create a quiz for the team. It becomes a practical tool for a real problem, not just abstract technology."

Ultimately, the same technique that sparks creativity is also Martins' most practical defense against fears of human complacency. "It's about training people to use AI to ask you questions to trigger your own critical thinking, as opposed to just prompting, 'Produce me a discharge plan.' Doing so reduces the risk of the final output just being generic slop."

She closes with a word of caution: the risk of complacency mirrors a well-known clinical phenomenon called "alert fatigue." "We have heart monitors beeping constantly, and eventually, nurses just start clicking the alarm off without thinking. The alerts get ignored. We are absolutely going to go through the same cycle with AI. The models will get better at self-correcting, but for now, the only way to manage that risk is to teach people how to think critically and prompt in a way that keeps them in the loop."