The promise of frontier AI isn’t showing up on the front lines of business. Powerful new models generate headlines, but their real-world value often falls short, whether they arrive as an advanced agentic workflow or a simple chatbot. Sold a seamless pitch, executives often overlook the barriers that can slow learning, limit trust, and cap impact, such as poor integration with core tools and strict data privacy constraints.

Kristin Gleitsman, a life sciences executive with over a decade of experience and a current Fellow at Fellows Fund VC, has spent her career at the intersection of AI and biology. Having scaled discovery platforms at life science companies including Veracyte, Guardant Health, and PacBio, she has a distinctive perspective on why enterprise AI underperforms. Much of the industry, Gleitsman says, mistakenly reaches for technological solutions to what are, at their core, process problems.

"The gap isn’t about how powerful the models are anymore. It’s about whether organizations know how to integrate them. When AI lives outside real workflows, its value narrows quickly, regardless of how impressive the underlying technology is," says Gleitsman. She saw that thesis confirmed when she led an enterprise-wide generative AI rollout at Veracyte.

Identifying misalignment: To get an accurate view of everyday workflows and the integration challenges they might present, she called for applications from entry-level employees all the way up to VPs. "I intentionally tried to pull in people with various levels of experience and exposure. We had 150 applicants," she recalls. "We took about 50 of them, put them in little learning pods, and rolled it out that way."

Simple wins: The exercise revealed that usefulness had little to do with a tool's complexity. "It does not have to be complicated to be useful. Some of the most useful things that people did were really just getting a very simple prompt, and using it. It’s much better to do enablement from the top down and use cases from the bottom up and then some bridge in the middle around compliance and oversight."

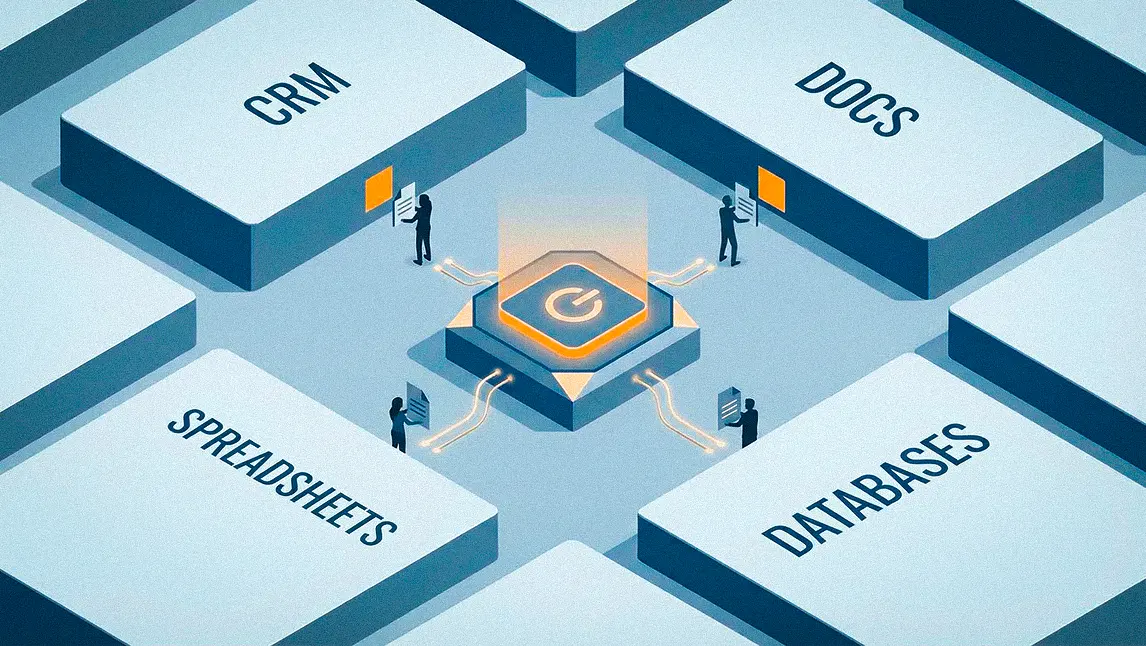

One of the most potent barriers to adoption, Gleitsman says, is a failure of integration. To illustrate, she describes a clunky workaround that, to many, will feel all too familiar. "In one case, we had people who were trying to integrate with an enterprise sales software. They'd have to export a file from the program, import it, and then they could do their work using an off-the-shelf LLM. What would be ideal is lowering that barrier." This need has made seamlessness the defining advantage in the enterprise AI race. Major software industry players are already racing to embed AI where work actually happens, aware that platform dependency can come with long-term financial implications.

In regulated sectors, implementing AI too quickly can create serious compliance problems. Gleitsman explains that some companies are being forced to confront the immaturity of their existing security and governance frameworks, because off-the-shelf solutions may lack appropriate safeguards. But the larger barrier is uncertainty: a lack of precedent, shared understanding, and regulatory clarity that makes risk-averse organizations hesitant to deploy AI where it could be most useful. "In life sciences and healthcare, there are real concerns around protected health information, and putting documents that contain it into an LLM still feels very dangerous, especially an off-the-shelf LLM."

Flawed foundation: Gleitsman extends her critique to AI’s role in scientific research itself, noting that the scientific literature these models train on is inherently biased. "When you're training on the corpus of scientific literature, you're optimizing for positive outcomes and missing all the unpublished negative results. The reproducibility crisis is also a real thing, so you are training on an imperfect data set that can't replace scientific discernment." For Gleitsman, this doesn't mean the tools are useless. It means using them in a way that keeps human expertise as the final arbiter.

Lost in translation: Beyond the biases in the literature lies a more fundamental mismatch between the architecture of LLMs and the structure of scientific data, a technical reality that requires a deliberate data strategy to manage. "Large language models are trained on human-generated language. Not all systems conform to the structure of human language," she says. "Treating all data as if it were just another language problem introduces systematic risks that organizations must actively manage."

Identity crisis: Beyond these technical challenges, Gleitsman urges leaders to consider the human and cultural barriers that can hinder adoption. Pushback may have less to do with the technology than with how employees see their own work. "Are you automating things that employees find annoying and repetitive? Or are you asking them to automate away parts of their job where they feel a lot of identity and value? People are going to resist that really hard."

To close the gap between AI’s potential and its impact, Gleitsman proposes a new role designed to surface the constraints that hinder modern enterprises and kill technology's real-world value. "We have HR Business Partners. I think right now organizations need AI Business Partners. They would be there to serve the organization, help onboard people to new tools, catch these use cases, and then evangelize that across the organization."

Ultimately, she concludes, rolling out enterprise AI in a way that lives up to the hype requires closing the translation gap between the people who would benefit from AI tools and the software teams tasked with implementing them. "There's a barrier where everything has to be in neat little JIRA tickets as opposed to coming together and really talking about use cases. AI gives us a tool to break down that wall, but you also need people who are willing to lean in on both sides in order to do that."