AI often delivers its greatest value not through flashy moonshot projects, but by taking the busywork off the table. By absorbing repetitive, time-consuming tasks, it gives teams back the space to apply judgment where it matters most: in risk assessment, complex problem-solving, and decision-making that still demands human accountability. As AI moves from passive support to active participation in these decisions, the stakes rise quickly. Questions of governance, oversight, and responsibility move from theoretical to operational, especially in highly regulated environments like banking.

Jason Boova, Chief Compliance Officer at Grasshopper Bank and former U.S. Treasury federal regulator, sees AI’s role through a dual lens of innovation and regulatory responsibility. At Grasshopper Bank, a digital-only financial institution serving small and medium-sized businesses, Boova’s team uses AI to enhance compliance decision-making and streamline workflows while keeping human judgment at the center of every process.

"Capacity is probably the best way to think about whether a use case is working. It’s not just dollars saved. It’s how much more work the same team can do in the same amount of time," says Boova. "By freeing up their time, we gave our team a six-fold return on their bandwidth overnight." A key driver of this success is Grasshopper’s partnership with Greenlite AI, which helped the team achieve a 70% reduction in the time required for Enhanced Due Diligence (EDD). By automating the heavy lifting of data aggregation, the bank can scale client intake without diluting the quality of its oversight.

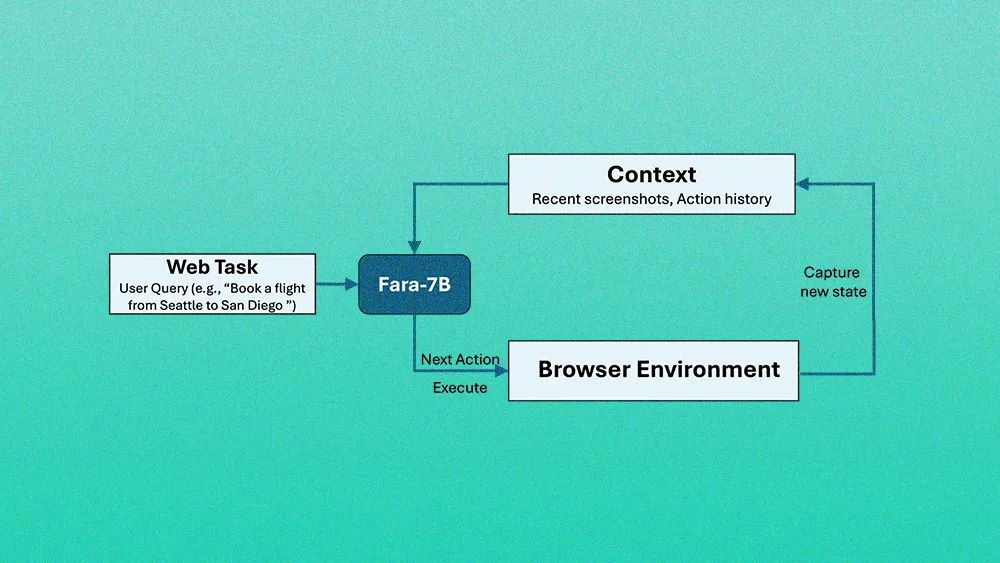

As organizations adopt more sophisticated agentic AI, Boova shifts the conversation from simple value to complex risk. He notes that AI is moving beyond chatbots and basic prompts toward autonomous systems capable of performing complex tasks, such as mounting tailored, real-time fraud defenses or preemptively rebalancing portfolios. These agents help drive hyper-personalization and compliance effectiveness at scale. The key challenge, however, remains how organizations apply proper governance and validate that an AI agent is working as intended.

Supplement, not supplant: Automating data gathering allows employees to focus on the judgment calls that matter most in managing risk. "We use AI not to make the decision, but to make it easier for people to make the decision," Boova says. "For example, underwriters and account approvers still make the final calls."

A risk-rating reality: For compliance-heavy tasks such as sanctions screening, which can involve high transaction volumes, a practical risk framework is essential. "While AI agents aren't technically models, our industry's model risk management framework is the best tool for risk-rating them," Boova explains. "The agent’s purpose, the data it accesses, and the systems it touches determine its risk rating, which dictates the level of governance required."
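To make the idea concrete, here is a minimal sketch of how a rubric built on the three factors Boova names (purpose, data accessed, systems touched) might map an agent to a governance tier. It is not Grasshopper's framework: the three-level scale, the "rate by the riskiest factor" rule, the control lists, and every name in the code are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical ordinal scale used for each risk factor."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical description of an AI agent along the three factors named in the text."""
    name: str
    purpose: Level       # how consequential the agent's task is
    data_access: Level   # sensitivity of the data the agent can read
    system_reach: Level  # how far the agent can act on other systems


# Hypothetical mapping from overall risk rating to required governance controls.
GOVERNANCE = {
    Level.LOW: ["periodic spot checks"],
    Level.MEDIUM: ["periodic spot checks", "documented validation", "quarterly review"],
    Level.HIGH: ["documented validation", "independent review",
                 "human approval of every output", "ongoing monitoring"],
}


def risk_rating(agent: AgentProfile) -> Level:
    """Rate the agent by its riskiest factor (an assumption), so one
    high-risk dimension cannot be averaged away by two low ones."""
    return max(agent.purpose, agent.data_access, agent.system_reach)


def required_controls(agent: AgentProfile) -> list[str]:
    """Look up the controls that the agent's risk rating dictates."""
    return GOVERNANCE[risk_rating(agent)]


if __name__ == "__main__":
    screener = AgentProfile("sanctions-screening-assistant",
                            purpose=Level.HIGH, data_access=Level.HIGH,
                            system_reach=Level.MEDIUM)
    summarizer = AgentProfile("policy-summarizer",
                              purpose=Level.LOW, data_access=Level.LOW,
                              system_reach=Level.LOW)
    for agent in (screener, summarizer):
        print(agent.name, risk_rating(agent).name, required_controls(agent))
```

Taking the maximum rather than an average is one defensible design choice: an agent that only drafts summaries but can read sensitive customer data still lands in the highest tier. A real framework would likely weight factors and add qualitative review on top.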

In 2025, Grasshopper became the first U.S. bank to deploy a Model Context Protocol (MCP) server, allowing business clients to securely query their own financial data using AI assistants. The move signaled a broader shift in how the bank approaches AI governance, building in clear controls, auditability, and human oversight from the start as AI becomes more deeply embedded in day-to-day financial workflows.

Supercharging analysts: AI supports compliance work across the board, from routine monitoring and fintech partner management to BSA/AML investigations involving cryptocurrency. By pairing AI’s efficiency with human expertise, Grasshopper Bank uses technology to strengthen the analytical work at the heart of effective compliance. "I want my team to have better access to data, stronger investigation insights, and more ways to investigate issues effectively," Boova says.

Managing the new AI hire: He recommends treating AI like a new employee, using the same human-led governance principles. "You can’t just launch an agent and expect it to solve problems," he says. "You have to train it, give it clear policies and procedures, and compare its output to human work. It’s the same quality check you’d apply to a person." To prevent silos, Grasshopper maintains a cross-functional AI team where anyone can submit an idea, which is then prioritized based on impact and risk. The group meets regularly to align initiatives, ensure clear communication, and enforce cohesive guardrails.
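The "compare its output to human work" step can be pictured as a parallel run: the agent produces recommendations on cases that people have already decided, and the two are compared. The sketch below is a hypothetical illustration of that quality check, not Grasshopper's process; the `Case` record, the decision labels, and the 95% agreement threshold are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """One reviewed case: the human decision and the agent's recommendation (hypothetical)."""
    case_id: str
    human_decision: str
    agent_decision: str


def agreement_report(cases: list[Case], threshold: float = 0.95) -> dict:
    """Compare agent output with human decisions on the same cases.

    `threshold` is a hypothetical minimum agreement rate, not a figure
    from the article.
    """
    if not cases:
        raise ValueError("need at least one reviewed case")
    # Collect every case where the agent disagreed with the human reviewer.
    mismatches = [c.case_id for c in cases if c.agent_decision != c.human_decision]
    rate = 1 - len(mismatches) / len(cases)
    return {
        "agreement_rate": rate,
        "meets_threshold": rate >= threshold,
        # Each disagreement goes back to a person for review.
        "needs_review": mismatches,
    }


if __name__ == "__main__":
    sample = [
        Case("A-1", "approve", "approve"),
        Case("A-2", "escalate", "approve"),
        Case("A-3", "decline", "decline"),
        Case("A-4", "approve", "approve"),
    ]
    print(agreement_report(sample))
```

On the four sample cases the agent agrees with the human reviewer three times, so the agreement rate is 0.75 and case A-2 is flagged for review. As with a new employee, the disagreements, not just the rate, are what tell the team where the agent's training or procedures need work.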

As organizations accelerate their adoption of AI, Boova emphasizes that human judgment remains central to compliance decision-making. With payments and treasury functions becoming increasingly instant and programmatic, the governance frameworks established today form the essential architecture for trust. By anchoring technological advances in human accountability, Grasshopper ensures technology enhances, rather than replaces, the critical thinking required for a secure financial future. "My goal is to make sure our people have the tools to succeed and make good risk decisions," Boova concludes. "AI agents are just one of many tools that support the work they’re already doing."