For leaders deep in enterprise AI adoption, the prevailing mood isn’t excitement. It’s exhaustion. While 2025 may have been heralded as the "year of the agent," for many it has become the year of governance fatigue. Frameworks multiply, new models promise breakthroughs, and orchestration layers stack up, yet little endures. The issue isn’t volume but isolation. AI governance frameworks don’t connect, so they remain theoretical and fail under real-world pressure. As leaders balance innovation and risk, interoperability, not more frameworks, is emerging as the only viable path forward.

Horatio Morgan is an AI Governance and Responsible AI Strategist who has spent his career designing systems to cut through this chaos. Drawing on his experience across governance, risk, and cybersecurity, he argues that the path forward requires a fundamental change in thinking, away from creating more frameworks and toward building the bridges that allow them to interoperate.

That shift, he says, is already happening in practice, even if most organizations don’t realize it. "Organizations are already doing interoperability. We just don’t recognize it, and we haven’t really thought through how it works yet," says Morgan. He contends that the fatigue problem is rooted in the immense difficulty of operationalizing a framework.

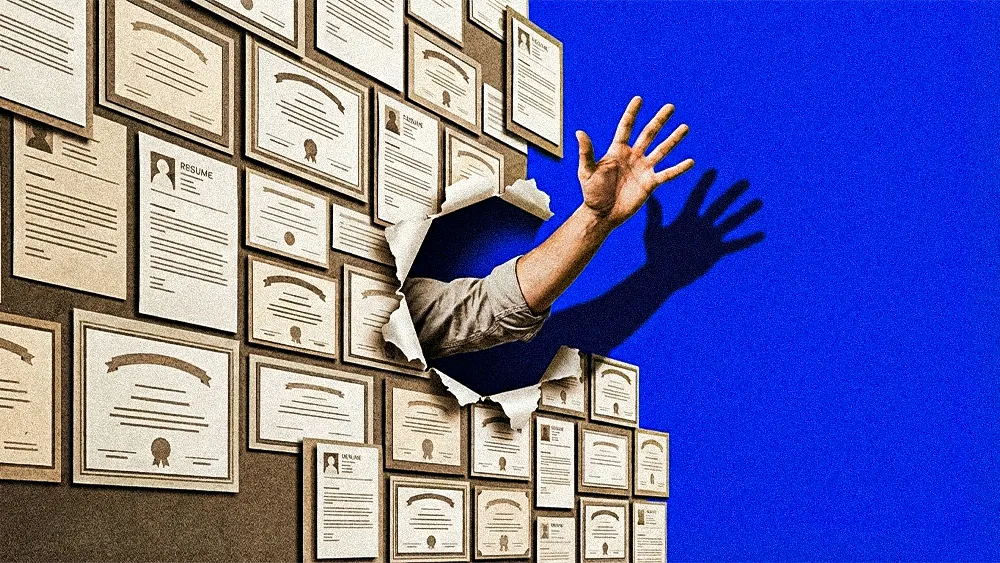

Action over words: For many, this task remains incredibly hard, a reality often masked by how trivially easy it has become to generate the theoretical documents themselves. "Because AI can now write a framework, everybody is writing one," he says. "But a framework just gives you a broad overview; it doesn't provide a pointed direction on how to get things done. The real work comes back to how you act on it."

Paper tigers: "Many framework creators don't know how to build standards into them. You're left with a theoretical document that can't go anywhere," Morgan continues. His solution is a bridge that works between frameworks, translating requirements from standards like SOC 2 and the EU AI Act into a unified model. This system audits policies against core pillars of ethics and bias using measurable KPIs, providing tailored dashboards for both technical specialists and C-suite executives.
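To make the idea of a bridge concrete, here is a minimal sketch of how requirements from different frameworks might be mapped onto shared pillars and checked against KPIs. It is not Morgan's system: the `Requirement` and `Kpi` types, the `audit` function, the placeholder references, the mapping of each framework to a pillar, and the KPI name and threshold are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


# Hypothetical types for illustration only; none of this comes from a real tool.
@dataclass(frozen=True)
class Requirement:
    framework: str  # e.g. "SOC 2" or "EU AI Act"
    ref: str        # placeholder reference, NOT a real clause or control number
    pillar: str     # the unified pillar this requirement is mapped to


@dataclass(frozen=True)
class Kpi:
    pillar: str
    name: str
    value: float
    threshold: float
    higher_is_better: bool = True

    def passes(self) -> bool:
        # Compare the measured value with its threshold in the right direction.
        if self.higher_is_better:
            return self.value >= self.threshold
        return self.value <= self.threshold


def audit(requirements, kpis):
    """Group requirements by pillar and report a status for each pillar."""
    grouped = {}
    for req in requirements:
        entry = grouped.setdefault(req.pillar, {"frameworks": set(), "kpis": []})
        entry["frameworks"].add(req.framework)
    for kpi in kpis:
        if kpi.pillar in grouped:
            grouped[kpi.pillar]["kpis"].append(kpi)

    report = {}
    for pillar, entry in grouped.items():
        if not entry["kpis"]:
            status = "unmeasured"  # a requirement exists but nothing measures it
        elif all(k.passes() for k in entry["kpis"]):
            status = "pass"
        else:
            status = "fail"
        report[pillar] = {"frameworks": sorted(entry["frameworks"]), "status": status}
    return report


# Assumed example data: the references and the pillar mapping are invented.
REQUIREMENTS = [
    Requirement("SOC 2", "placeholder-1", "bias"),
    Requirement("EU AI Act", "placeholder-2", "bias"),
    Requirement("EU AI Act", "placeholder-3", "ethics"),
]
KPIS = [
    Kpi("bias", "approval_rate_gap", value=0.03, threshold=0.05, higher_is_better=False),
]

if __name__ == "__main__":
    for pillar, summary in audit(REQUIREMENTS, KPIS).items():
        print(pillar, summary)
```

In this sketch, one measured KPI answers for every framework mapped to the same pillar, and a pillar with requirements but no KPI is flagged as unmeasured rather than silently passing. A dashboard for specialists or executives would be a different view over the same report.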

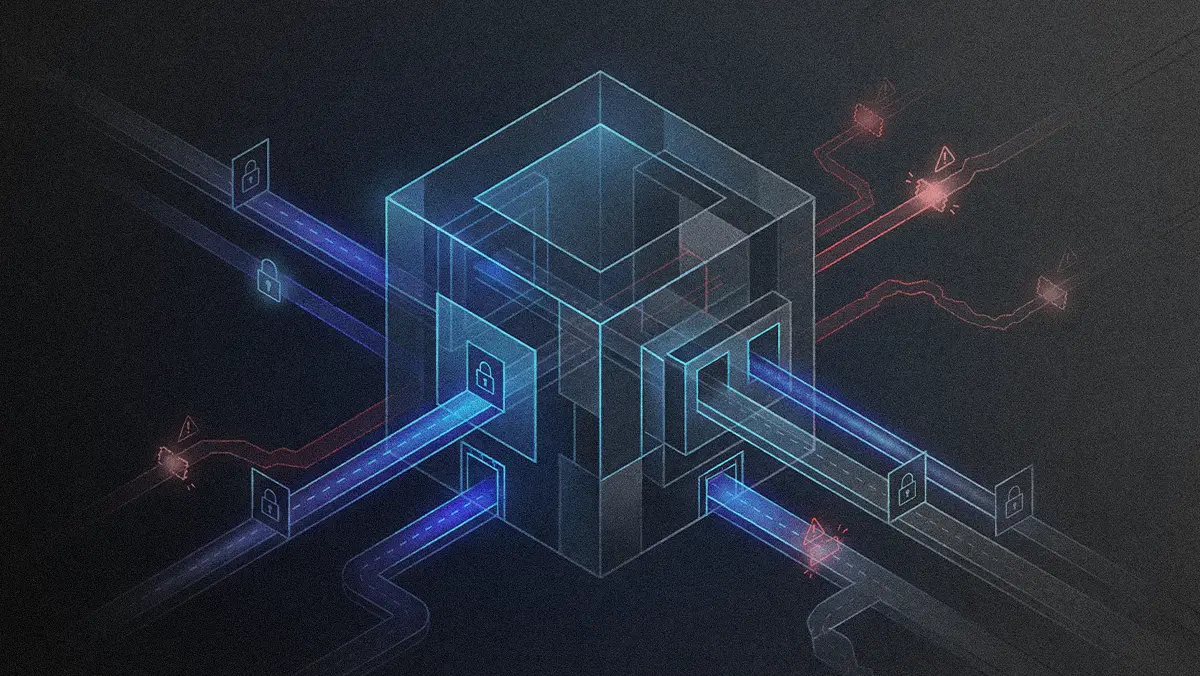

The non-negotiable demands of global commerce are a primary force driving this move toward interoperability, with cleaner compliance emerging as an important secondary benefit. With a growing "policy patchwork," companies are already juggling a tangled web of regulations. The regulatory uncertainty, amplified by reports of a potential delay in the EU AI Act and intense lobbyist pushback, makes a siloed approach harder and harder to sustain.

The price of market access: Many believe that, to thrive, enterprises should adopt an "operate once, comply many" strategy. Enterprises are consolidating governance through unified governance, risk, and compliance (GRC) models while rethinking infrastructure choices, including sovereign AI platforms that address jurisdictional risk. At the technical layer, emerging standards like the Model Context Protocol (MCP) are beginning to provide a shared language that makes interoperability possible across systems. "If you're an enterprise working on the world market, you are absolutely going to need this kind of interoperability. Your product won't go into Japan, Europe, or Brazil if you don't build your system by design. This has to be well thought out from the start," Morgan insists.

Director required: It's a technical challenge that often points to a deeper, organizational one. The "messy" communication and lack of executive buy-in reported in many AI initiatives are frequently symptoms of a leadership vacuum. Morgan explains that companies are trying to fit a new, multi-layered, and cross-functional discipline into old organizational charts, leading to confusion and paralysis. "You still need somebody to run the ship," he says. "You still need an orchestra director."

Beyond interoperability, Morgan outlines several practical considerations for teams working to make AI governance truly effective in practice.

Human at the head: "The human has to be the final decision-maker. AI systems are biased by their training and by the decisions we make around them. It isn’t a flaw, but it’s why human judgment has to stay in the loop to steer the system and keep it accountable." For him, human oversight isn’t optional or symbolic. It’s what keeps governance ethical, explainable, and defensible as AI systems scale.

Translate time to money: Measurement is where many AI programs break down. "You have to define what value actually means to you. If AI saves six hours, what does that translate to in dollars? If you can’t connect time saved to real outcomes, you can’t measure value, and you can’t govern it."
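The arithmetic behind that question can be sketched in a few lines. This is a rough illustration rather than a method Morgan prescribes: the function names, the assumption that the six hours are saved per week, the hourly rate, the tracking period, and the cost figure are all hypothetical.

```python
def time_saved_value(hours_saved_per_week, loaded_hourly_rate, weeks, adoption_rate=1.0):
    """Gross dollar value of time saved over a tracking period.

    adoption_rate discounts the saving when only part of the team uses the tool.
    """
    return hours_saved_per_week * loaded_hourly_rate * weeks * adoption_rate


def net_value(gross_value, total_cost):
    """Value left after licensing, integration, and oversight costs."""
    return gross_value - total_cost


if __name__ == "__main__":
    # Assumed inputs: 6 hours saved per week, a $50 loaded hourly rate,
    # a 26-week tracking window, and $5,000 in total costs.
    gross = time_saved_value(hours_saved_per_week=6, loaded_hourly_rate=50.0, weeks=26)
    print(f"gross: ${gross:,.2f}")                     # gross: $7,800.00
    print(f"net:   ${net_value(gross, 5000.0):,.2f}")  # net:   $2,800.00
```

The dollar figure only means something if the saved hours are actually redirected to other work, which is the "real outcomes" link the quote insists on.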

Permission to pause: When AI initiatives stall, Morgan’s first recommendation isn’t acceleration. It’s restraint. "If AI is implemented and the value isn’t there, the first step is to pause. Reevaluate what 'good' looks like for the organization and whether the technology is actually aligned to that goal."

Crawl, then walk: Governance maturity requires patience, not breadth. "Implementing too much at once makes it impossible to see what’s working. Two or three AI applications is enough. It takes six months to a year to track value properly and understand whether the system is doing what it’s supposed to do."

Hire for the how: Finally, Morgan urges leaders to rethink what AI talent looks like. "People don’t need to know everything about AI to make it work. They need to know how to measure outcomes, and experience transfers. If someone knows how to evaluate performance, like a management analyst, that skill matters more than AI expertise alone."

In the end, frameworks, leadership, and governance aren’t separate challenges. They all hinge on interoperability as the mechanism that turns intent into execution. Without it, frameworks remain isolated, compliance stays fragmented, and explainability becomes impossible to sustain at scale. The ability to trace and justify AI decisions only matters if those insights can move across systems, standards, and jurisdictions. "The challenge now isn’t expanding AI into new areas," Morgan concludes. "It’s building standards that let those areas work together."