Artificial intelligence is creating new risks inside and outside organizations. Security leaders now have to manage not just system defenses, but how AI behaves, what it can access, and how it impacts customers.

We spoke to Shannon Murphy, Sr. Manager of Global Security and Risk Strategy at Trend Micro, a global AI security company, about this shift in the CISO role and what it takes for security leaders to earn trust in an AI-driven world. Murphy is a global speaker who works closely with executive teams on security strategy and has led programs that generated more than $350 million in pipeline revenue, giving her a close view of how boardroom decisions translate into real-world risk.

"Security leaders used to focus on endpoint agents and patching, but now the job includes bias, data governance, and treating AI like a virtual team member," says Murphy. She points to the recently viral Air Canada chatbot incident, where the airline was forced to compensate a customer after its chatbot provided incorrect information, to underscore the growing legal stakes. Companies are increasingly being held liable for errors made by their AI systems, a pattern now emerging across other cases involving autonomous technologies.

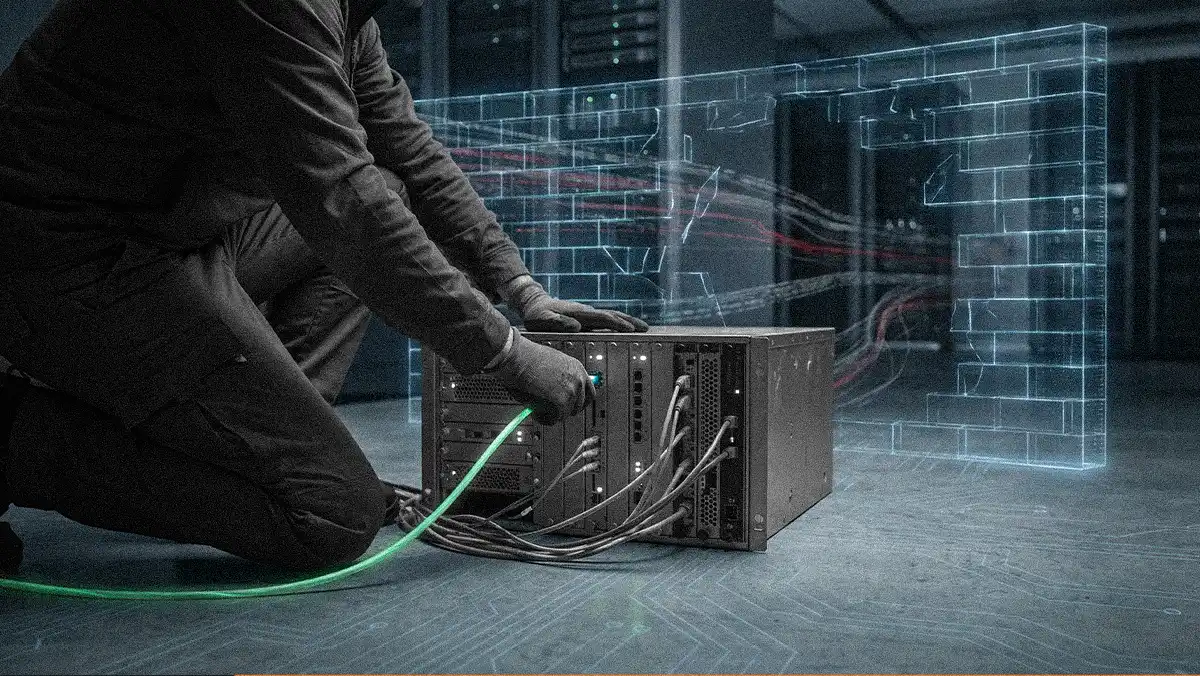

The builder burden: To manage AI risk, Murphy says organizations first need to be honest about the role they play in the AI ecosystem. She groups companies into three camps: adopters that rely on AI embedded in existing tools, builders that create AI products for customers, and scalers that deploy AI broadly across the business. "Each role carries a very different risk profile, but builders face the highest bar," Murphy says. "When you’re putting AI into the hands of customers, trust becomes part of the product itself, and that means proving your systems are safe, compliant, and accountable from day one."

The importance of transparency: To build trust, Murphy says organizations need to show that their systems are safe without giving too much away. "There's a delicate balance between assuring your users that you're doing things in a safe way," she says, "but not going so far into the architecture details, because then you start to put your own competitive advantage at risk."

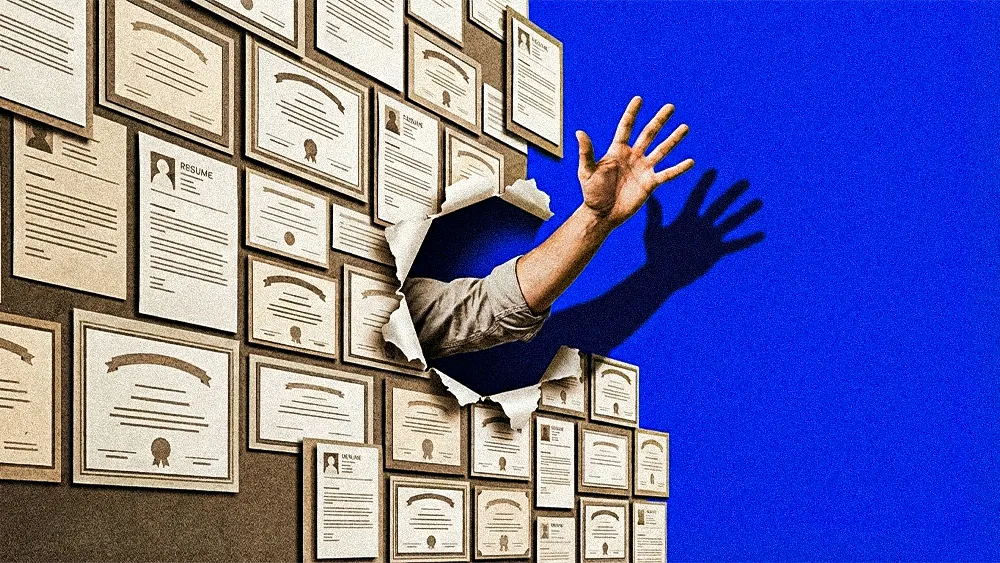

Trust raises the bar for expertise: The drive to earn trust is also creating demand for hyper-specialized roles, Murphy says. She points to the Chief Nursing Informatics Officer (CNIO) as an example of the expertise needed in highly regulated industries. "As nursing gets more and more digital, there's a need for a hyper specialization and security for that domain."

AI is also fueling a flood of attacks from expert and novice hackers alike, and CISOs must manage all of them. For example, a growing portion of these attacks comes from younger, tech-savvy actors in the Spanish-speaking underground, many of whom are early adopters of AI tools. Their participation is increasing the volume of attacks that security teams must track and defend against.

Why volume matters: Murphy describes this flood of activity as overwhelming "noise" that can mask real threats. "When we have a higher volume of cyber attacks, that does typically correlate [with] breach," she says. "We need to get a lot smarter in the way that we are protecting the organization."

The core prescription for defense is to shift from a reactive mindset to a proactive, adversarial one. The most effective strategy is to close off opportunities before attackers can exploit them. Today's exposure management tools can detect early indicators of an attack, which Murphy says is "like knowing if someone is putting a hairpin in the lock."

Her final advice for every organization building the next generation of AI tools is a simple mandate: "Be your own bad guy first, and that might get you out of a lot of pain down the road."