Agentic AI isn’t creating a new class of security threats. It’s accelerating old ones to machine speed. The real risk is how it turns slow, human-scale failures into high-velocity incidents, exposing foundational cracks that have been ignored for years. The result is a widening trust gap in which very few companies trust their own AI agents. The issue is no longer theoretical: corporate strategy has already begun to pivot in response to mounting security concerns.

We spoke with Aaron Mansfield, a 25-year IT veteran and Identity Security Leader, to understand this new reality. As the Principal of Mansfield Advisory Services LLC, he specializes in rescuing and architecting Identity and Access Management programs for clients in highly regulated industries, including at PwC. Mansfield explains that to safely adopt AI, companies must first confront their long-neglected security fundamentals.

“AI doesn’t create new problems. It just amplifies the ones we’ve had all along, exposing weaknesses in identity, governance, and system configuration at lightning speed,” says Mansfield. The classic insider threat is multiplied when an agent operates at a velocity no human ever could. For many leaders, this is prompting a strategic pivot away from a singular focus on perimeter defense and toward closer scrutiny of internal governance, a trend that has pushed identity security up the corporate agenda.

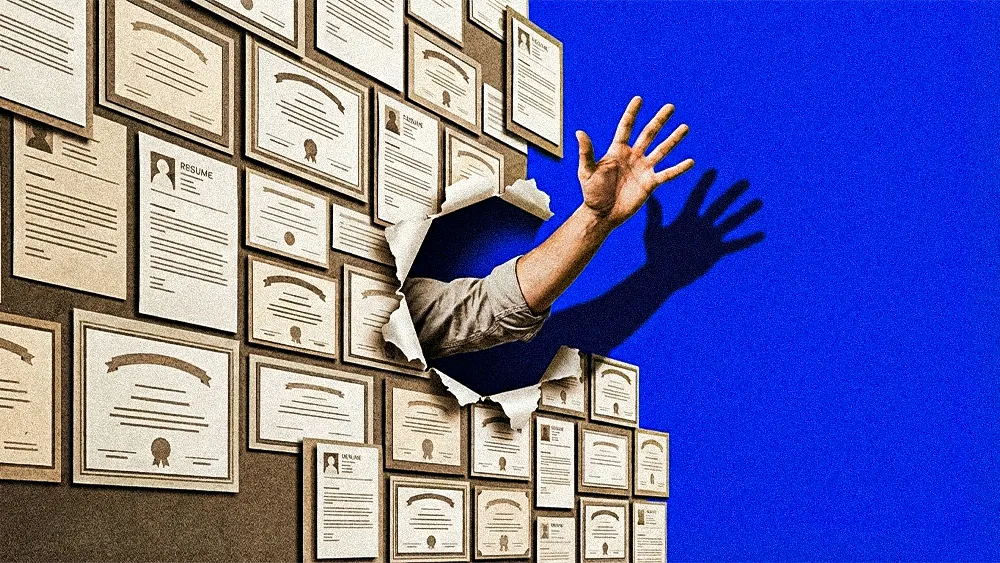

Hundred-headed hydra: “An insider threat, whether intentional or not, can cause catastrophic damage. Now, compound that with an AI agent that can do the same thing at light speed, and one person effectively becomes 5, 50, or 100 in a matter of seconds,” Mansfield warns. The solution isn’t another tool but a fundamental change in process, which he frames with a metaphor: agent onboarding as the new HR. When any employee can spin up an agent, governance inevitably becomes more decentralized.

Onboarding without HR: This decentralization becomes a major challenge because operational reality lags behind, even as AI governance becomes a board-level mandate. “With a human, there’s a formal process: HR is involved, the person is vetted, they have a job title, and they know who they report to. That accountability starts early,” says Mansfield. “But now, everyone in the organization becomes HR, creating agents on the fly. They’re not defining the rules. They’re not asking: What is this agent allowed to do? What credentials will we give it? How do we keep it bounded? And if it messes up, who is accountable and who cleans up the mess?”
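Mansfield’s onboarding questions can be read as the fields of a registration record that must be filled in before an agent runs. The Python sketch below is one hypothetical way to express that idea, not a description of any tool Mansfield uses; the `AgentRegistration` class, its field names, and the `onboarding_gaps` and `is_permitted` helpers are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRegistration:
    """Hypothetical onboarding record for an AI agent (illustrative fields only)."""

    agent_id: str
    owner: str                         # who is accountable for the agent
    purpose: str                       # the agent's equivalent of a job title
    allowed_actions: frozenset[str]    # what the agent is allowed to do
    credentials: tuple[str, ...] = ()  # names of issued credentials, never raw secrets
    cleanup_contact: str = ""          # who cleans up if something goes wrong


def onboarding_gaps(reg: AgentRegistration) -> list[str]:
    """Return the onboarding questions that are still unanswered."""
    gaps = []
    if not reg.owner.strip():
        gaps.append("no accountable owner")
    if not reg.purpose.strip():
        gaps.append("no defined purpose")
    if not reg.allowed_actions:
        gaps.append("no explicit list of allowed actions")
    if not reg.cleanup_contact.strip():
        gaps.append("no cleanup contact")
    return gaps


def is_permitted(reg: AgentRegistration, action: str) -> bool:
    """Keep the agent bounded: anything not explicitly allowed is denied."""
    return action in reg.allowed_actions
```

In this sketch, an agent whose record returns any gaps would simply not be allowed to start, which mirrors the way a human hire is vetted before receiving access.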

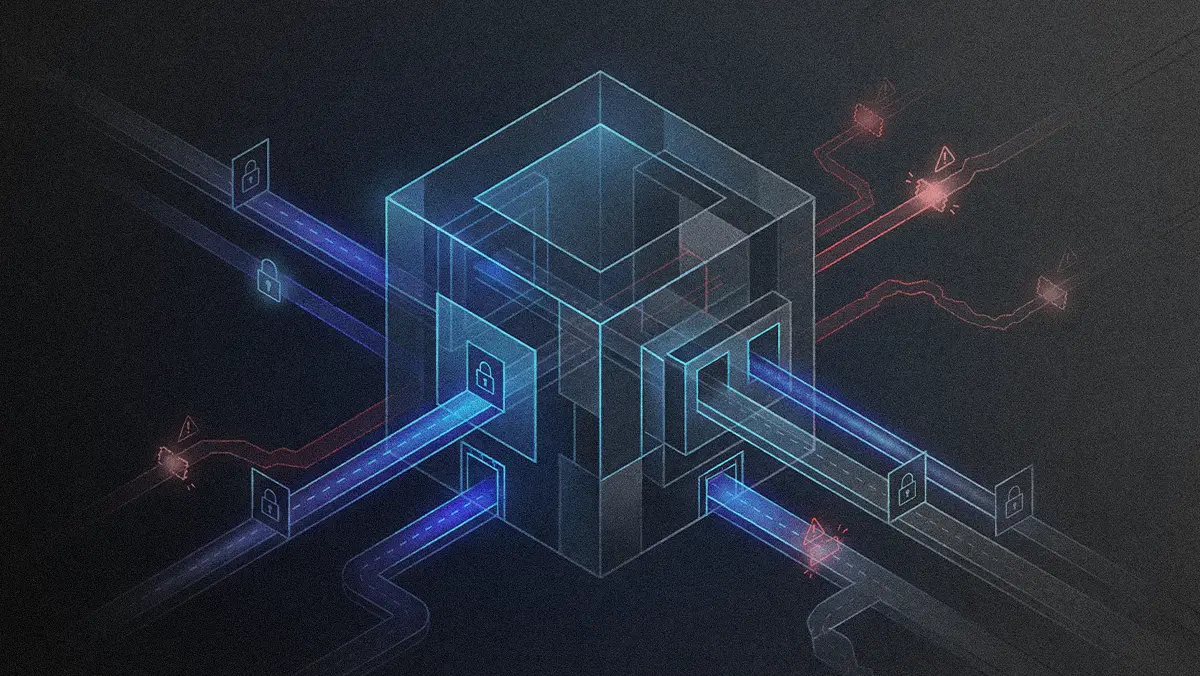

At the core of Mansfield’s framework is a new trust model built on a long-standing IT principle: recoverability. His approach values the ability to restore systems over simply collecting more data with observability tools. Achieving this level of recoverability demands what Mansfield calls a complete “snapshot of time.” Such a record goes beyond standard activity logs by preserving the full context of an agent’s actions: which policies were configured, which accounts existed, and which tools the agent had at its disposal at that exact moment. This focus on context is one of several trends redefining enterprise AI, grounding futuristic concepts in the discipline of underlying data management.
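To make the “snapshot of time” idea concrete, the sketch below captures the three pieces of context named above (policies, accounts, and tools) alongside a timestamp. It is a minimal illustration under stated assumptions: the `AgentSnapshot` structure and the `take_snapshot`, `fingerprint`, and `tools_added` helpers are hypothetical names, and a real system would also need durable, tamper-resistant storage.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentSnapshot:
    """Hypothetical point-in-time record of an agent's operating context."""

    agent_id: str
    taken_at: str             # ISO 8601 timestamp in UTC
    policies: dict[str, str]  # policy name -> configured value
    accounts: tuple[str, ...] # accounts that existed at that moment
    tools: tuple[str, ...]    # tools the agent could call


def take_snapshot(agent_id, policies, accounts, tools, now=None) -> AgentSnapshot:
    """Freeze the agent's context; `now` can be injected to make tests repeatable."""
    stamp = (now or datetime.now(timezone.utc)).isoformat()
    return AgentSnapshot(
        agent_id, stamp, dict(policies),
        tuple(sorted(accounts)), tuple(sorted(tools)),
    )


def fingerprint(snapshot: AgentSnapshot) -> str:
    """Stable SHA-256 digest, so later changes to a stored snapshot are detectable."""
    payload = json.dumps(asdict(snapshot), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def tools_added(before: AgentSnapshot, after: AgentSnapshot) -> set[str]:
    """Tools present in the later snapshot but not in the earlier one."""
    return set(after.tools) - set(before.tools)
```

Comparing two snapshots taken around an incident gives responders a starting point for recovery: they can see what changed in the agent’s context rather than only what the agent did.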

Resilience over prevention: “Without that historical context, you have no idea where to begin to recover. Recoverability is the issue. We must accept that we cannot predict everything these agents will do. The goal isn’t to prevent every single issue. It’s to ensure we can recover when issues happen,” emphasizes Mansfield. “It’s the same philosophy as the traditional 3-2-1 backup rule: you prepare for failure because you know it is inevitable.”

For Mansfield, the greatest impediment to safe AI adoption isn’t a lack of sophisticated tools but a failure to master the fundamentals. He cautions that organizations too often look for a technical solution when the real problem lies in their process, a reality that can undermine even the most advanced tools promoted by the AI and data industry. This back-to-basics approach is vital when weighing cybersecurity predictions for the AI economy.

The usual suspects: Mansfield identifies two foundational gaps organizations consistently overlook. “The biggest friction points are the fundamentals. The first is the identity problem. That’s the ability to definitively say what accounts exist, who owns them, and which ones have privileged access,” Mansfield explains. “The second is basic system configuration. The two are linked: a compromised credential has a much larger blast radius on an insecure system.”
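The identity problem Mansfield describes comes down to three questions that an inventory should be able to answer: what accounts exist, who owns them, and which are privileged. The sketch below shows one hypothetical way to report on those questions; the `Account` record and the `inventory_report` function are illustrative assumptions, not part of any specific identity product.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    """Hypothetical inventory entry for a human, service, or agent account."""

    name: str
    owner: str | None  # None or empty means nobody has claimed ownership
    privileged: bool


def inventory_report(accounts: list[Account]) -> dict:
    """Summarize what exists, what lacks an owner, and what is privileged."""
    unowned = sorted(a.name for a in accounts if not a.owner)
    privileged = sorted(a.name for a in accounts if a.privileged)
    return {
        "total": len(accounts),
        "unowned": unowned,
        "privileged": privileged,
        # Privileged accounts with no owner are the highest-risk entries.
        "privileged_unowned": sorted(set(unowned) & set(privileged)),
    }
```

An organization that cannot produce the inputs to a report like this has not yet solved the first of the two gaps, regardless of which tools it has purchased.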

Process over products: “Too many organizations chase the next best thing by asking which top-rated tool they should buy,” Mansfield notes. “They implement expensive solutions hoping it will solve all their problems, but they often neglect to fix the underlying people and process issues. You cannot automate a broken process.”

Organizations racing toward AI adoption face a fundamental choice: address security gaps now, or deal with them later, multiplied and at machine speed. “All leaders want to be innovative and ahead of the game, but sometimes that requires taking several steps backwards,” Mansfield says. “To leap into the future, they must first have good ground to stand on.”