Most organizations are hitting a wall with AI. During deployment, many discover that their existing infrastructure cannot keep up with its variable demands. Between massive data volumes and heavy "East-West" traffic (server-to-server traffic inside the data center), AI models can quickly overwhelm traditional networks. Now, some experts say the moment calls for an industry-wide shift from static systems to autonomous environments built for orchestration and continuous inference.

To understand this shift, we spoke with Subha Shrinivasan, SVP and Global Head of Delivery and Customer Experience at Rakuten Symphony. With experience managing CX for more than 15 Fortune 500 clients, including Robin.io before its acquisition by Rakuten, Shrinivasan is an undisputed expert in enterprise transformation. During our conversation, she made the case for a complete architectural rethink, explaining why the industry must rebuild from the ground up using what she calls the "AI-first data center."

"The concept of a 'fixed-box' data center, where you lock in compute, storage, and networking, is dead. It will be completely obsolete in five years," Shrinivasan says. From her perspective, the economics of AI cannot justify locking expensive GPUs in fixed boxes where they might sit idle.

An economic imperative: Financial realities are pushing the industry toward composable infrastructure, Shrinivasan explains. In this fluid model, resources are pooled and provisioned on demand. "Five years from now, we will be talking about clouds of GPUs spread across various geographies. Orchestration layers will be able to pull in those resources on demand and then release them back into the pool."

But the future is not a simple replacement, Shrinivasan continues. Organizations cannot discard decades of technological investment. The central business challenge is building a seamless pipeline between old and new, because orchestrating this hybrid environment at human speed is impossible.

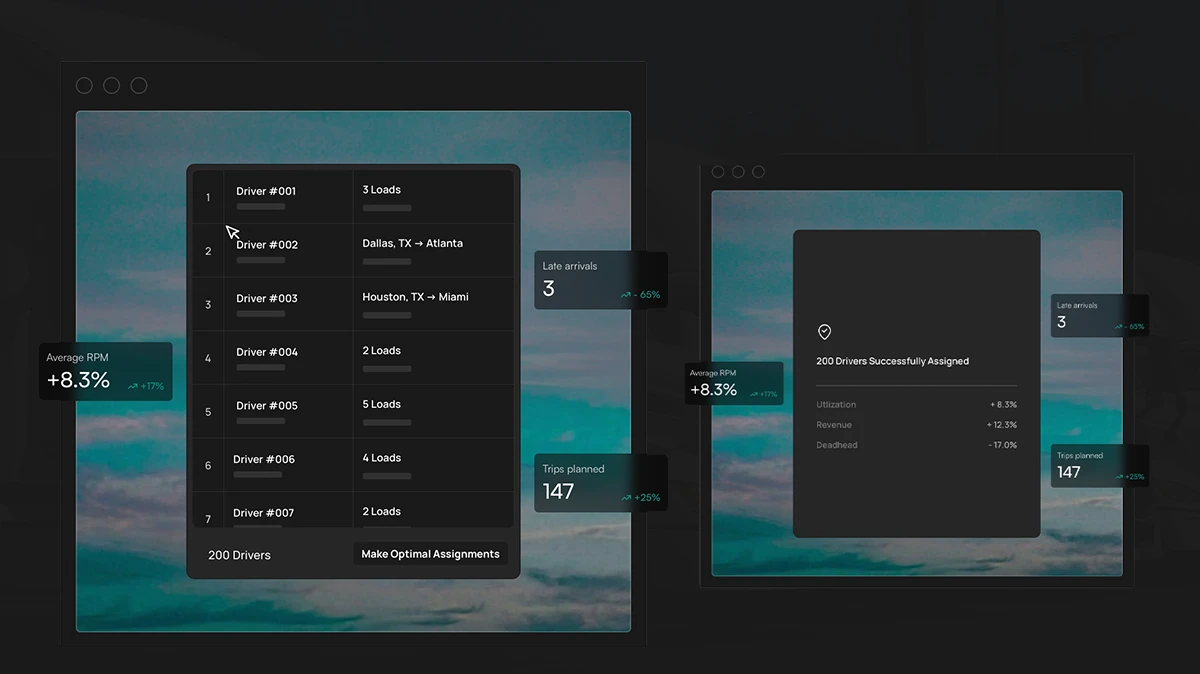

For Shrinivasan, the solution is to build autonomous systems that use AI to manage AI. "AI decides humans cannot catch up. This is where automation and autonomous AI are going to have a huge, huge, huge play." Known as AIOps, the approach creates self-healing networks that fix issues before a human is even aware of a problem. "The goal is to build models so thoroughly trained on failure scenarios that they can sense what to do when something fails and fix it on their own."

Training in simulation: That reliability is achieved using digital twins, or simulated environments where the system learns to handle failure, Shrinivasan says. In this model, the human role evolves from operator to verifier. "The job of a human is just to provide verification to confirm the AI’s proposed action is correct and authorize it to proceed."

The governance gap: Granting this level of autonomy to machines, however, highlights the need for governance. Shrinivasan points to a lack of established certifications for AI infrastructure and to the need for frameworks such as ISO/IEC 42001, the international standard for AI management systems. "In the data center space, where data processing is a company's heartbeat, we need a framework on the level of an ISO certification." For her, the stakes are simple: "If you are not compliant, you are out of business."

Access to hardware is a significant obstacle contributing to this vacuum, Shrinivasan explains. Here, she echoes warnings about an emerging AI oligopoly, in which a handful of hyperscalers have preferential access to the entire technology stack. "The most pressing obstacle is that the necessary hardware is unavailable, expensive, and locked within a handful of players. When hardware becomes the bottleneck, you cannot build models or make inferences. This reality puts undue leverage in the hands of a few and places everyone else at a disadvantage."

Ultimately, Shrinivasan’s proposed solution is not to compete directly with these giants. Instead, she sees the hardware bottleneck creating an imperative to dominate the emerging landscape of edge inferencing. In her view, this will become the great equalizer for the rest of the industry.

While hyperscalers continue to dominate massive model training, the future business value for most will be shaped by an explosion of smaller, localized AI, Shrinivasan concludes. "Where all the other industries will pick up is in the inferencing space at the edge—closer to the business, closer to the user. Five years down the line, AI is going to intelligently decide and do things autonomously, helping the business do better and better."