AI strategy doesn’t always follow the Western model of cutting jobs, chasing quick savings, and letting technology take the lead. Around the world, organizations are proving that innovation can strengthen people, culture, and sovereignty at the same time. Of all the approaches, the strongest are those that amplify human skill, protect individual identity, and remain true to their purpose. Taken together, they reveal a bright future where AI is bold, human, and built to last.

For an expert's take, we spoke with Dion Wiggins, Co-Founder and CTO of Omniscien Technologies. A globally recognized authority on digital sovereignty and a former Gartner Vice President, Wiggins is literally writing the book on the subject: a 1,500-page, three-volume tome. In his experience, success with AI depends primarily on designing strategies that accurately reflect an organization’s culture, values, and purpose.

The Cherokee Nation perfectly embodies this thoughtful approach, Wiggins says. "This is a glowing case study of how to do it right. A perfect showcase of what enterprises should do." Their strategy builds on existing technology by enriching it with their own data and priorities, he explains. AI is used to strengthen education, language preservation, and community programs, but always with cultural values and sovereignty at the center.

For Wiggins, getting it right extends beyond business strategy to the more profound concept of sovereignty. In the digital age, true control comes from owning one’s data and narrative, he explains. A reliance on Western-centric AI surrenders that control, allowing others to define a group’s history, values, and voice.

A tale of two histories: For example, Wiggins describes working with the Grand Cairo Museum. "Using a generic AI would give them a Western version of their own history, when in reality, they have millions of documents that tell the Egyptian version. That is sovereignty: controlling the message. It's not all John Wayne and cowboys. It's the power to define for themselves how they live, what they believe in, and to ensure their story is told in their own voice."

Digital lithium: Just as natural resources shape a nation's power, data now defines its digital independence, Wiggins says. "Sovereignty for different organizations means different things. If a nation discovered a deposit of lithium on its land, that resource would be part of its sovereignty to manage and control. Data is no different. It must be treated as a strategic national asset, the same way we think of oil or any other raw material."

As another example of thoughtful AI adoption, he points to the Singapore government, which has embraced a people-first approach on a national scale. It's proof that investing in human skills pays off. Still, Wiggins cautions that balance is essential. Too many organizations chase speed and savings at the expense of building lasting capability, he explains. The result is a false set of expectations about what AI can actually deliver.

Proof in the productivity: "The Singapore government mandated AI training for every single civil servant. They understood it wasn't enough to simply present the tools without guidance. They implemented mandatory training to teach people how to get the most out of them. As a result, productivity across the board went up 43% in six months."

ROI, oh my: Many companies expect instant ROI from AI, Wiggins explains. But that’s not how real progress works. "You don’t pay for a system and start making a profit in six months. You’ve barely rolled it out. And when organizations rush to replace people instead of developing them, like law firms cutting paralegals, they hollow out their own future. Because those entry-level roles are where expertise begins."

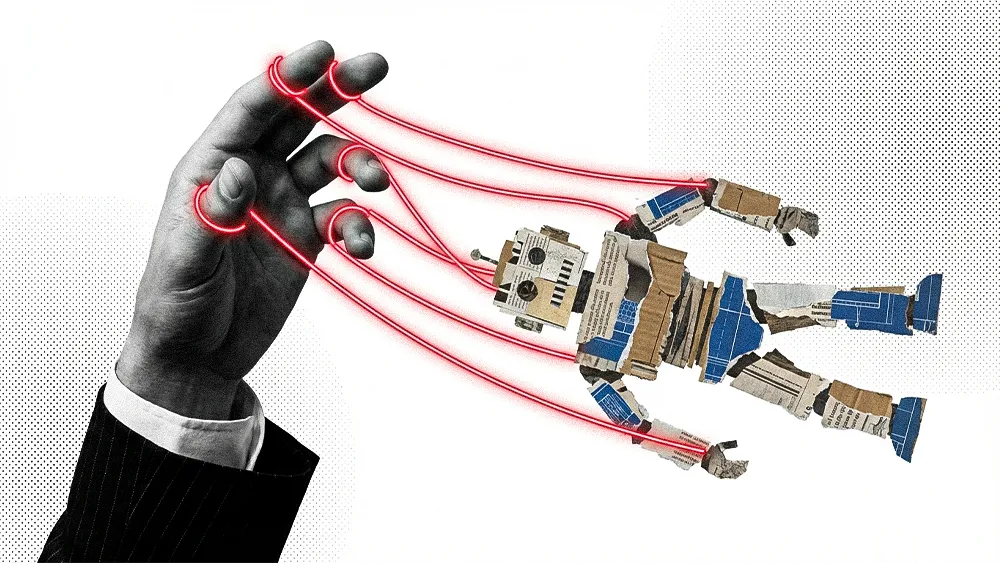

An alternative to the "replacement" model is a philosophy of "amplification," Wiggins says. Here, the goal is to augment human skills. As proof that he practices what he preaches, Wiggins says his own development team uses AI in small, validated slices to accelerate their work by 40%. "Amplifying your people goes far beyond a generic sales call summary. It means using AI to perform sophisticated voice analysis that can identify allies, opponents, and strategies to overcome opposition. It can then generate a detailed report, book the next meeting, and handle dozens of other useful tasks."

But a successful, people-first approach requires a fundamental change in strategy, too. For Wiggins, the future of AI lies in specialization, not scale. Recalling a recent machine translation competition, he describes how a small, seven-billion-parameter Chinese model outperformed much larger systems. "It was tiny and focused on one task, and that’s exactly why it won. Smaller, purpose-built models are the future."

Eventually, this "problem-first" mindset will yield more effective AI models, Wiggins says. But it's a lesson the West is only now beginning to learn. To be truly sustainable, an AI strategy must start with clarity of purpose, he concludes. "Too many organizations are going the other way around. They start with the tool and then look for problems to solve, rather than starting with their real-world problems to find the right tool. It's a fundamentally flawed strategy."