AI is a double-edged sword for most security leaders: it accelerates development and exploitation alike. Because generative AI models can produce insecure code, threat actors are weaponizing the trend to outpace defenders. Now, most security teams find themselves in the familiar yet dangerous position of playing catch-up to cyberattackers.

At least, that's the assessment from Yotam Perkal, a cybersecurity leader and Senior Manager of Threat Research at Avalor Security, a Zscaler Company. A frequent speaker at cybersecurity conferences such as Black Hat, DEF CON, and BSidesLV, Perkal holds eight patents in vulnerability management and threat intelligence. In his view, AI is becoming both the source of new vulnerabilities and the tool most often used to exploit them.

"AI is compressing the timeline from vulnerability discovery to exploitation. At the same time, it’s helping unearth more vulnerabilities than ever, partly because AI-generated applications are creating a larger, more insecure attack surface to begin with," Perkal says.

The result is a dangerous trust gap, he explains: developers gain a false sense of confidence in code that appears sophisticated but remains fundamentally flawed. "The fundamental gap is that AI models aren't trained to write secure code. Instead, they're trained to write functional code. Security just isn't an incentive in their training process."

Starting at the source: For him, a systemic fix begins upstream, with the labs building the models before the technology reaches end users. "The frontier labs like OpenAI, xAI, and Google must build security into the entire lifecycle, from training and building the models to how they're evaluated before release."

'I just want it to work': Even if users wanted to provide security guidance, most wouldn't know what to ask, Perkal explains. "A security-savvy user might know how to prompt the model for secure code, but most people don't. They wouldn't even know what to ask for. Most users want something that works, and that’s what the model delivers."

In the rush to adopt new technology, organizations risk moving faster than the essential safety infrastructure can be built, Perkal says. Calling it an "ecosystem immaturity" problem, he contrasts decades of process in software engineering with the newness of AI. "Software engineering has had decades to build an ecosystem with mature processes, tooling, and best practices. We are only three years into the AI revolution, and that entire ecosystem of checkpoints and guardrails doesn't exist yet. That's the core of the challenge."

From state actors to script kiddies: Meanwhile, the technology itself serves a dual purpose for threat actors. "AI lowers the barrier to entry for anyone looking to write malware or exploit vulnerabilities. But in the hands of a capable state actor, it becomes a force multiplier. They know exactly how to point the technology to be far more lethal than a novice just playing around."

An even greater challenge is emerging with autonomous AI agents, Perkal says. As companies rush to deploy agents with real access to enterprise tools and data, they are introducing a far more complex attack surface.

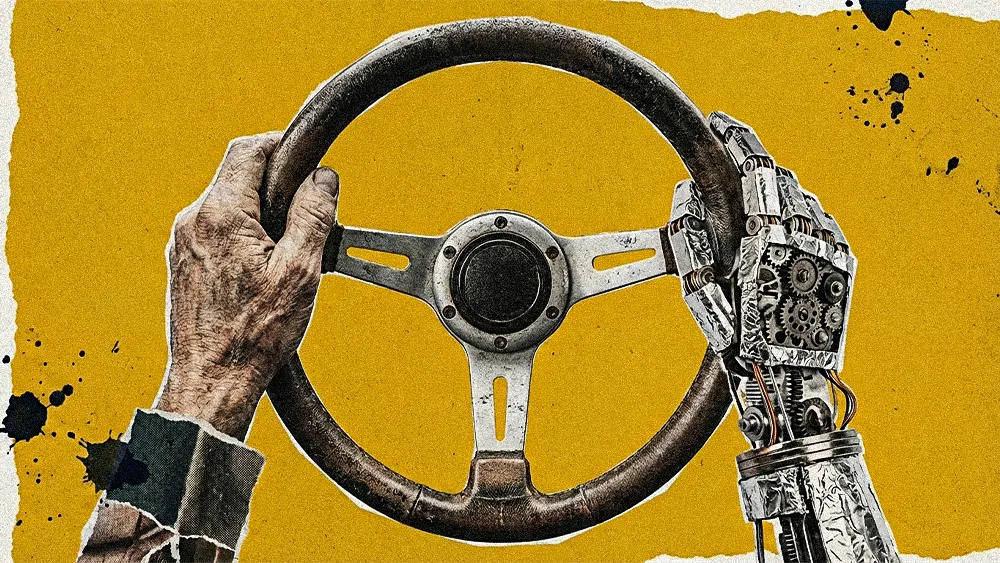

Who's driving this thing?: The most significant risk is autonomy, he explains. "The danger isn't the AI's intelligence. It's the autonomy we grant it without enforcing basic security principles, such as authorization and least privilege. Once you give a nondeterministic system the freedom to act on your behalf, with access to the internet, your tools, and your data, you've introduced a class of risk that must be explicitly modeled."

Profits over protection: The pressure to "keep up with the Joneses" is the core cultural driver of this risk, Perkal continues, and it leads many organizations to prioritize speed over diligence. "The motivating business driver is the rush to market, which means security often comes in second, at best."

The weakest link: As these agents are woven more deeply into enterprise workflows, they will become a prime target, he says. "Attackers always go for the path of least resistance. As AI agents become more common in our workflows, they will become the weakest link. They are harder to defend, and that’s exactly where attackers will focus their efforts."

Perkal’s final piece of advice is a return to a "first principles" approach. Before any tool is deployed, teams must fully understand the new risks it introduces, he says. "Start with the first principle: threat modeling. You must understand exactly what you are introducing into your environment. What can this system do? What could go wrong, and what are the repercussions? From there, you can build the proper validation and testing. You cannot trust AI-generated code out of the box." Ultimately, these are the same risks present in all software, he concludes. "The real danger is neglecting fundamental security practices just because you're dealing with a shiny new AI offering."