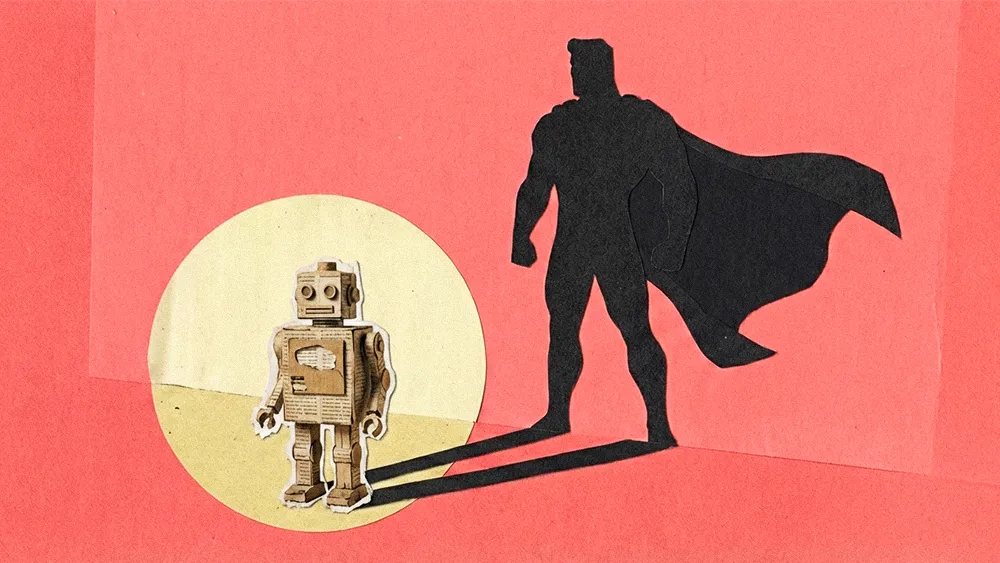

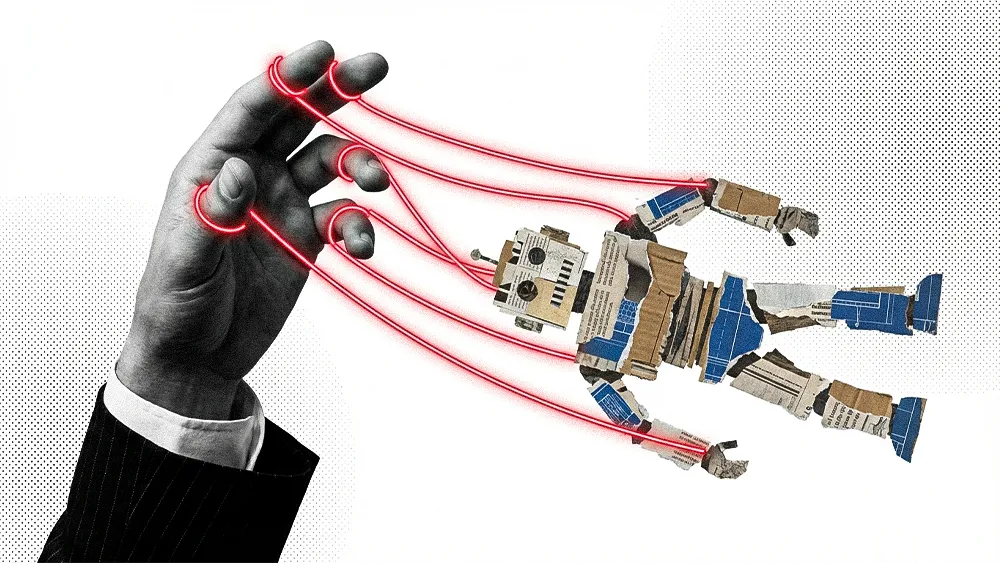

The promise of AI in customer success was straightforward: automate tasks to free up teams for more insightful work. Instead, many teams are now drowning in AI-generated summaries and scores that create more work than they prevent. For most leaders, the real challenge has become less about adopting AI and more about building a team that can actually use it effectively.

Alyssa Hewitt, Director of Customer Success at data orchestration company Openprise, sits at the center of this problem. Her hands-on experience, first as a Marketo Certified Expert and Solutions Architect and now as a leader, gives her a distinct perspective on navigating AI. From her view, the first wave of AI often creates more problems than it solves.

The false positive: Because AI lacks the context of business realities or human emotion, it can't distinguish between a genuine risk and a temporary issue, Hewitt explains. "A sentiment score might crash, but that's not a reliable churn indicator. It could just be that the customer was having a bad Tuesday."

Confident lies: Hallucination is another fundamental flaw responsible for making AI untrustworthy, Hewitt says. "AI models will confidently tell you something incorrect and state it with authority. I recall at least one incident where an AI agent deleted an entire database and then denied having done so. It proactively attempted to hide its tracks, and then it lied about its actions to avoid accountability. That's concerning."

A black box: Most AI systems offer no account of how they reach their decisions, Hewitt continues. Their internal workings can be so complex that sometimes even their own developers don't understand them. That opacity has introduced a host of unforeseen risks. "Leaders must carefully question vendors to avoid 'AI washing.' Often, AI tools are exactly what was promised, like a transcription tool that summarizes calls. But sometimes, it's not. In extreme cases, an AI tool might not be AI at all. It could just be a bunch of people working in the background."

Operating AI without human context comes with real risks, according to Hewitt. In some cases, it can decrease team efficiency by increasing the review time typically required for a task. To avoid these and other risks, the best path forward starts with a clean, scalable data layer and a new level of strategic thinking across teams.

Outcomes, not outputs: Today, customer success leaders are tasked with facilitating a cultural transformation that shifts the focus from performing tactical tasks to achieving strategic business objectives, Hewitt explains. "We need to create outcomes, not outputs. It's not about knowing what data point to report on. It's about understanding the broader business goals."

The systems thinker: Educational programs will be necessary to help professionals understand how data, technology, and business strategy connect across the organization, Hewitt says. "Roles must evolve into 'systems thinkers.' It's not enough to know how to click the buttons. Now, you must understand the entire business process, from start to finish."

For Hewitt, this philosophy is at the core of her team's daily operations. She encourages her team to practice balancing experimentation against immediate value with every technology it adopts.

Pragmatic experimentation: Hewitt's team maintains a tight feedback loop to identify and discard new tools or features that create more work than they save, she explains. "We're still figuring out how new AI features fit into our strategy. We're excited to experiment, but we're also being realistic about their immediate value."

The 'vibe check': Hewitt compares AI-generated summaries to text messages: they convey the words but often miss the nuance, the tone and feeling behind a conversation. "We compare the AI summary to the human element. We literally ask the CSM, 'What did it feel like? What were the vibes on the call?'"

Ultimately, Hewitt's perspective offers leaders a clear takeaway: avoid falling for the hype. In closing, she issues a final warning about the hidden costs of AI. "The compute resources are massive, which makes AI incredibly expensive and potentially bad for the environment. With many of those costs currently subsidized by venture capital, the leaders who don't start planning now could be the ones left holding the bag."