The cutting edge of artificial intelligence is no longer just about building smarter models; it’s about an architectural revolution. Traditional machine learning is being eclipsed by complex orchestrators that can leverage multiple tools and models to perform actions in the real world. But even as these intelligent agents become more capable, they're running headfirst into the intractable walls of security, regulation, and trust. Now, a complex new battleground is emerging for regulated industries like finance.

We spoke with Saurabh Nigam*, a Staff ML Engineer and Manager of Data & AI Platform at Visa. With experience as a senior data engineer at Walmart and deep expertise in the big data and microservice ecosystems that power modern AI, Nigam is on the front lines of implementing these transformative yet challenging new systems. For him, the promise of a fully autonomous agentic future is tantalizingly close, but keeps breaking down at the most critical moment.

Escaping bottlenecks: Nigam pointed to a common frustration that exposes the limits of today’s AI agents. "In a complete experience, the user says, 'Book a flight,' the agent finds the best option, and the booking is confirmed," he explained. "But right now, everything stops at the payment step. The agent pauses and says, 'Please enter your card and complete the payment.' That’s still a roadblock in a critical part of the user experience."

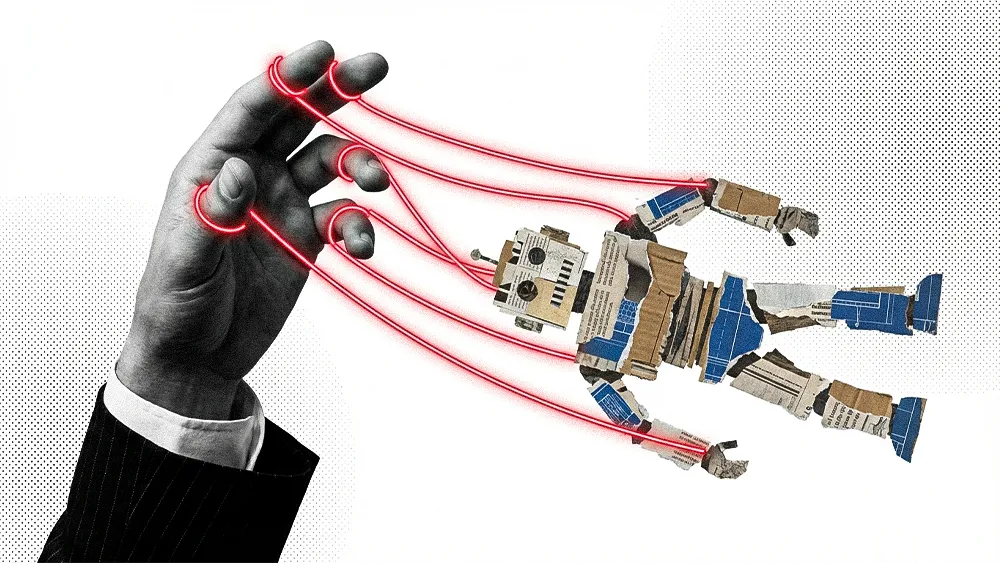

Nigam says this type of distributed responsibility model can create a "trust cluster" where vulnerabilities could emerge anywhere along the chain. It also raises the billion-dollar question for every enterprise: how do you build guardrails around systems that are designed to be autonomous? For most, the answer starts with the quality of the data that autonomous systems act upon.

Bad data in, chaos out: "It's usually very difficult to see exactly what data is being fed into agentic systems due to the black box nature of LLMs," Nigam stated. "But the reality is, everything depends on the training data. If you feed AI agents bad data, which we rarely have full control over, then when the input is garbage, you'll definitely get garbage out."

To address this bottleneck, Visa developed its own MCP server that connects agents directly to transaction processing. Nigam called it a "potential game-changer," but he also stressed that it’s still fragile. If attackers gained access to the agent, they could exploit the system. In enterprise environments, security and regulatory compliance are essential before such solutions can scale. Here, the double-edged nature of the broader agentic frameworks emerges. Whether it’s Anthropic’s MCP or Google’s A2A, the push to standardize these interactions has created an arms race. And yet, according to Nigam, all face the same unresolved question: where do the true guardrails belong?

A communication channel, not a fortress: "The MCP server is just a means of communication. The one doing the communication, whether you call it a tool, a product, or an agent, should implement the guardrails," Nigam explained. "The MCP server has some levels of security, but it's not the best right now. On both sides, the receiver and the sender should also have proper guardrails to handle their communications."
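To make the "guardrails on both sides" idea concrete, here is a minimal Python sketch. It is an illustration only, not Visa's implementation or any part of the MCP specification: the `PaymentRequest` type, the policy constants, and every function name are hypothetical. The point it shows is that the sender checks a request before it leaves the agent, and the receiver checks it again without trusting the sender.

```python
from dataclasses import dataclass

# All names and limits below are hypothetical, chosen only for illustration.
MAX_AMOUNT_CENTS = 50_000
ALLOWED_CURRENCIES = {"USD", "EUR"}
TRUSTED_AGENTS = {"travel-agent-01"}


@dataclass(frozen=True)
class PaymentRequest:
    agent_id: str
    amount_cents: int
    currency: str
    user_approved: bool


def sender_guardrail(req: PaymentRequest) -> list[str]:
    """Checks the agent runs before a request leaves it."""
    problems = []
    if not req.user_approved:
        problems.append("user has not approved this payment")
    if not 0 < req.amount_cents <= MAX_AMOUNT_CENTS:
        problems.append("amount outside allowed range")
    return problems


def receiver_guardrail(req: PaymentRequest) -> list[str]:
    """Checks the payment side repeats, without trusting the sender."""
    problems = []
    if req.agent_id not in TRUSTED_AGENTS:
        problems.append("unknown agent")
    if req.currency not in ALLOWED_CURRENCIES:
        problems.append("unsupported currency")
    if not 0 < req.amount_cents <= MAX_AMOUNT_CENTS:
        problems.append("amount outside allowed range")
    return problems


def send_over_channel(req: PaymentRequest) -> dict:
    """The channel only carries the message; each side enforces its own policy."""
    sender_issues = sender_guardrail(req)
    if sender_issues:
        return {"status": "blocked_by_sender", "reasons": sender_issues}
    receiver_issues = receiver_guardrail(req)
    if receiver_issues:
        return {"status": "blocked_by_receiver", "reasons": receiver_issues}
    return {"status": "accepted", "reasons": []}
```

In this sketch, a compromised agent that skips its own checks would still be stopped by the receiver, which is the reason for duplicating the amount check on both sides.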

For Nigam, this challenge is most acute in the fight against financial fraud, which he described as a relentless, escalating war fought by machines on both sides. The threat is no longer a static set of rules to be programmed against, but a dynamic adversary that learns and adapts in real time.

Escaping model drift: "The scammers have improved. And with AI, they’re improving even faster. Now we have AI fighting against AI," Nigam said. "Attackers learned how fraud detection models work and adapted their strategies. It’s a very difficult battle right now." In machine learning terms, this constant evolution—known as "drift"—means a model’s performance is always degrading as it contends with an invisible, intelligent opponent. "It’s neck and neck right now," Nigam said.
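One common way teams watch for drift is to compare the distribution of a model's recent scores against a baseline. The Python sketch below computes a Population Stability Index (PSI) for that purpose. It is a generic monitoring technique offered as an assumption about how drift might be tracked, not a description of Visa's fraud systems; the function name, the bin count, and the smoothing constant are illustrative choices.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of scores; 0 means identical binned distributions."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    # Fall back to a width of 1.0 when every value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so the maximum value lands in the last bin.
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        eps = 1e-6  # illustrative floor to avoid log(0) for empty bins
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently cited rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, though the right threshold depends on the model and the data. A signal like this only shows that inputs or scores have moved; it does not by itself reveal that an adversary has adapted.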

*All opinions expressed in this piece are those of Saurabh Nigam, and do not necessarily reflect the views of his employer.